Program

A workshop partially funded by the DesCartes Program from CNRS@CREATE.

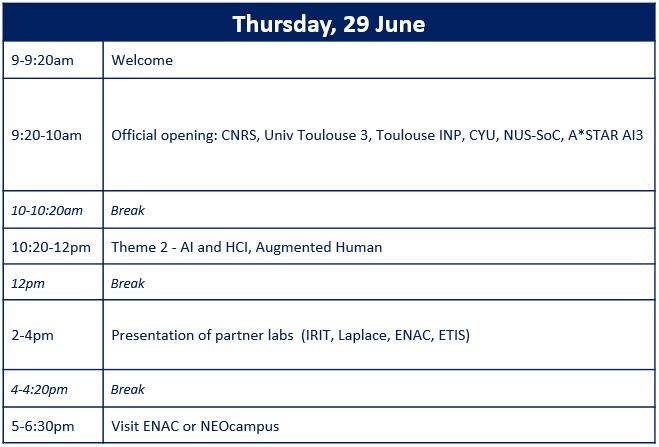

June 29th at IRIT lab (Univ. Toulouse 3 campus)

Online meeting room:

https://cnrs.zoom.us/j/95241428368?pwd=d2VtUUh2MEN2SG90QTJOZm5QKytIZz09

Meeting ID: 952 4142 8368

Passcode: z6FDe0

Onsite meeting: IRIT Auditorium, IRIT, Cr Rose Dieng-Kuntz, 31400 Toulouse

Google maps: https://goo.gl/maps/7VJBPZGTdgWW7Ceg9?coh=178572&entry=tt

How to get there:

Metro Line B to Université Paul Sabatier station:

https://goo.gl/maps/Tyct6fCgNDYrxo8b7

Morning sessions

9am to 10am – Welcome session

- Welcome by Prof. J.-M. Pierson, IRIT Director

- Official opening:

  - Dr. Su Yi, Executive Director A*STAR AI3 (online)

  - Prof. Tan Kian Lee, Dean of the NUS School of Computing (online)

  - Prof. Iryna Andriyanova, Vice-President for Research, CYU

  - Prof. Pascal Maussion, Vice-President for International Affairs, Toulouse INP

  - Prof. Jean-Pierre Jessel, Vice-President for Research, Univ Toulouse 3

  - Dr. Michel Daydé, Deputy Director CNRS-INS2I

- Introduction to IPAL and SinFra, C. Jouffrais, IPAL director

- Outline of the SinFra 2023 program, C. Chaux, IPAL interim director

10am to 10:20am – Break

10:20am to 12pm – Theme 2 – HCI & AI – Augmented Human

Session chair: Wei Tsang (NUS) – Introduction to theme 2

5 scientific talks (20 min each) by:

- Lim Joo Hwee (A*STAR, online): “AGI: Challenges and Opportunities”

Generative AI is advancing very rapidly and shows great promise in many areas of AI. Does this mean we will have AGI soon? What challenges remain for AI researchers, and do we still have opportunities to contribute beyond current (multimodal) LLMs? In this talk, we will also briefly review intelligence, human-like or not.

- Sun Ying (A*STAR, online): “Towards Long-term Video Prediction via Multimodal Conditioning”

This talk will discuss the recent advances in video prediction, the remaining challenges, and a potential direction for future research.

- Frederic Dehais (ISAE-Supaero): “Pushing BCI out of the lab to improve human-machine symbiosis”

Steady-State Visually Evoked Potentials (SSVEPs) refer to the rhythmic activity observed in the occipital cortical areas in response to periodic rapid visual stimulation (RVS). SSVEP responses have been widely used in fundamental cognitive neuroscience research to investigate overt/covert attention, face processing, reading and working memory, among others. The rapid onset of the sustained responses and their high discriminability following a single stimulation have also established SSVEPs as a ubiquitous paradigm for the development of reactive Brain-Computer Interfaces (BCIs). Despite their potential benefits, SSVEP paradigms are visually intrusive and distracting for volunteers, potentially leading to decreased task performance and eye strain due to visual fatigue. Moreover, SSVEP-based BCIs require a long and tedious calibration phase for end-users. The aim of this talk is to show that it is possible to design innovative visual stimulation that overcomes these issues. We will present our latest research, which uses periliminal periodic visual stimuli to investigate attention and vigilance. Furthermore, we will discuss the benefits of using new types of visually comfortable aperiodic visual stimuli in conjunction with convolutional neural networks, which can significantly reduce BCI calibration time, improve classification accuracy and enhance user experience. Such an approach enables the implementation of transparent, “dual” active-passive BCIs that strengthen the symbiotic relationship between humans and machines.

- Ng Lai Xing (A*STAR): “Towards human-like memory for Augmented Visual Intelligence”

Augmented Visual Intelligence (AVI) aims to understand and provide cognitive assistance to users and their tasks using visual perception and cognitive science. This is particularly relevant as wearable devices become more prevalent and the amount of data they produce (images, videos, sensor data) increases exponentially. In this talk, I will propose research on modelling human-like memory to address the issues of representing, storing and retrieving memories for AVI applications.

- Marwen Belkaid (CYU): “Non-verbal behavior in autonomous social robots: how to generate it? how to evaluate it?”

Human interactions rely heavily on non-verbal communicative behaviors, also called social signals, such as facial expressions, gaze, and gestures. Substantial effort has gone into designing solutions that recognize and interpret these behaviors in humans. In this seminar, I will focus on a complementary endeavor: implementing such communicative behaviors in robots. This endeavor faces two challenges: 1) generating meaningful social signals in robots, and 2) assessing human responses to robot social signals. Building on my previous research, I will present some ideas on how to address these challenges in tomorrow’s autonomous social robots. I will then conclude by underlining the relevance of this research to explainability and trustworthiness in robotics and human-robot interaction.

Afternoon session

2pm to 4pm – Presentations of IPAL partners

- Laplace Research Institute by Pascal Maussion

- IRIT Research Institute by Jean-Marc Pierson

- ETIS Research Institute by Lola Canamaro

- ENAC School by Patrick Senac

4pm to 4:20pm – Break

4:30pm to 6:30pm – Choose one of the following options:

- Visit to the neOCampus research program

Meet at the IRIT cafeteria with Mickael Martin for departure at 4:30pm.

neOCampus is an experimentation and innovation ground located on the Rangueil science campus. It is open to all of the region’s research laboratories and to partnerships with industry, as it aims to create synergies in research and innovation between laboratories and/or industry. The main areas of research concern energy, water and air, quality of life both inside and outside buildings, biodiversity, sustainable development, mobility and eco-citizenship, as well as interdisciplinarity for the design of innovative products and services.

- Visit to the ENAC School (https://goo.gl/maps/A92o2NuYv5e18oJF6?coh=178572&entry=tt)

Meet at the IRIT cafeteria with Patrick Senac for departure at 4:30pm (please note that ENAC is a 15-minute walk from IRIT).

June 30th at IRIT lab (Univ. Toulouse 3 campus)

Online meeting room:

https://cnrs.zoom.us/j/95241428368?pwd=d2VtUUh2MEN2SG90QTJOZm5QKytIZz09

Meeting ID: 952 4142 8368

Passcode: z6FDe0

Onsite meeting: IRIT Auditorium, IRIT, Cr Rose Dieng-Kuntz, 31400 Toulouse

How to get there:

Metro Line B to Université Paul Sabatier station:

https://goo.gl/maps/Tyct6fCgNDYrxo8b7

Google maps: https://goo.gl/maps/7VJBPZGTdgWW7Ceg9?coh=178572&entry=tt

Morning session

9am to 10am – Theme 4 – Data Sciences & Applications

Session chairs: Bogdan Cautis (NUS, CNRS@CREATE, Université Paris-Saclay) & Stéphane Bressan (NUS) – Introduction to theme 4

3 scientific talks (20 min each) by:

- Savitha Ramasamy (A*STAR, online): “Continual Learning of non-iid data”

- Teo Sin Gee (A*STAR): “AI for Cybersecurity, Cybersecurity for AI”

Over the past decades, cybersecurity threats have been among the most significant challenges to social development, resulting in financial loss, violations of privacy, damage to infrastructure, etc. Due to the complexity and heterogeneity of security systems, cybersecurity researchers and practitioners have shown increasing interest in applying machine learning/deep learning methods to mitigate cyber risks in many security areas, such as malware detection and essential player identification in underground forums. In this talk, we will introduce several ongoing AI for Cybersecurity and Cybersecurity for AI projects in the Cybersecurity Department of the Institute for Infocomm Research, A*STAR, Singapore.

- Nistor Grozavu (CYU): “Unsupervised representation learning for multimodal data”

10am to 10:20am – Break

10:20am to 11:40am – Theme 5 – Efficient AI

Session chair: Benoit Cottereau (CNRS) – Introduction to theme 5

4 scientific talks (20 min each) by:

- Benoit Cottereau (IRIT): “Event-based cameras and spiking neural networks for energy efficient artificial vision systems”

In this talk, I will show how event-based cameras and spiking neural networks (SNNs) can be used to design energy-efficient artificial vision systems.

- Camille Simon-Chane (CYU): “Machine Learning Approaches for Event-Based Cameras”

Event-based sensors, sometimes called ‘silicon retinas’, are composed of asynchronous, independent pixels that record changes (events) in their field of view. These high-speed, high-dynamic-range sensors are of interest for an increasing number of applications, including autonomous navigation.

However, the computer vision tools developed over the past decades for image processing, in particular deep learning, are not designed to exploit the intrinsic advantages of event-based sensors.

This talk will present ongoing work to harness advances in deep learning for event-based signals. In particular, we will describe the VK-SITS event representation, which provides robust compression of event streams for classification tasks.

- Nicolas Cuperlier (CYU): “Neurorobotic models for spatial cognition and navigation”

This presentation will briefly outline the recent work of the Neurocybernetics team at ETIS in the field of spatial cognition and navigation in mobile robots. We will begin by presenting the neurorobotic approach, which involves designing and integrating neural models into robots that mimic the neural circuits and mechanisms underlying spatial cognitive functions in the mammalian brain. We will then present some results illustrating how these bio-inspired models can help to meet the challenge of autonomous robotic navigation.

- Alex Pitti (CYU): “Digital computing in neural networks based on entropy maximization for intelligent systems”

11:40am to 1pm – Poster teasing session

Session chair: Caroline Chaux (CNRS)

Young researchers will introduce their work before presenting their posters in the afternoon (5 minutes and about 3 slides maximum each).

16 teasers by:

- Yash Pote: “Testing High-Dimensional Samplers Scalably”

- Yacine Izza: “On computing Probabilistic Abductive Explanations”

- Travis Seng: “Multimodal analysis of educational video content for learning applications”

- Gosh Bishwamittra: “Fairness and Interpretability in Machine Learning: A Formal Methods Approach”

- Yize Wei: “DroneBuddy: Towards Assistive Drone for Person with Visual Impairment”

- Baljinder Singh Bal: “First Steps Towards a Socially Competent Soft Robotic Assistive Arm”

- Peisen Xu: “Improve Pilot Situational Awareness with Control Feedbacks on OHMD”

- Xiaodan Chen: “Learning structures from sequences”

- Yue Guo: “Learning parametric Koopman operators for prediction, identification and control”

- Srecko Durasinovic: “The Christoffel function for supervised learning: theory and practice”

- Shiqi Wu: “Black-box model integration by iterative projection”

- Pratik Karmakar: “Shapley values in Data Valuation and Machine Learning”

- Félix Chavelli: “Discovering State Variables from Experimental Data”

- Alan Gany: “Representation learning for incomplete databases”

- Mario Alexis Emilio Michelessa: “Generation of Plausible Datasets using Diffusion Models”

- Yannis Montreuil: “Learn to defer SVM: A first approach”

Afternoon sessions

2pm to 3pm – Poster session

(poster template available here)

3pm to 3:20pm – Break

3:20pm to 4:40pm – Theme 1 – Explainable and Trustable AI

Session chairs: Blaise Genest (CNRS@CREATE) & Hwee Kuan Lee (A*STAR) – Introduction to theme 1

4 scientific talks (20 min each) by:

- Abhik Roychoudhury (NUS): “Trustworthy code generation from Large Language Models”

Automated program repair can be seen as automated code generation at a micro-scale. Research in automated program repair is thus particularly relevant today, given the movement towards automated code generation using tools like Codex and ChatGPT. Since code generated automatically from natural language descriptions is produced without an understanding of program semantics, semantic analysis techniques that auto-correct or rectify the code are valuable. In this talk, we will focus on the automated improvement of code generated by Large Language Models.

- Hwee Kuan Lee (A*STAR): “Simulating Physics at the molecular level with Neural Networks”

In this talk, we will discuss several methods of using Deep Neural Networks to simulate physics at the molecular level. We will demonstrate increased simulation speed while maintaining accuracy. Finally, we will discuss some key challenges in this field of study.

- Arsenia Chorti (CYU): “Trustworthy 6G”

Trust and trustworthiness are key value indicators of the forthcoming sixth generation (6G) of wireless networks. In this talk, we will discuss aspects related to trustworthy radio access networks and the role of physical layer security and artificial intelligence in achieving trustworthy 6G.

- Michele Linardi (CYU, online): “Causally Faithful Explanations of Deep Learning Models for Multivariate Time Series”

4:40pm to 5:20pm – Theme 3 – Natural Language Processing

Session chairs: Farah Benamara (Univ. Toulouse 3) & Jian Su (A*STAR) – Introduction to theme 3

2 scientific talks (20 min each) by:

- Su Jian (A*STAR): “Knowledge graph construction, application and beyond”

- Liu Zhengyuan (A*STAR): “From Generic to Controllable Abstractive Dialogue Summarization”

As one of the fundamental tasks in natural language processing, text summarization aims to condense source content into a shorter version while retaining its essential and informative content. Practically usable abstractive summarization requires context comprehension, domain generalization, reasoning, and the capability for fluent and coherent language generation. Furthermore, compared with monologic documents, human-to-human conversations are interactions among multiple speakers; they are less structured and interspersed with more informal language, and these dialogic features make summarizing conversations more challenging. In this talk, I will present an overview of recent computational approaches to generic and controllable abstractive dialogue summarization and share our insights into the challenges and solutions, which can shed light on other tasks in controllable language generation.

5:20pm to 6pm – Discussion and concluding remarks